|

FEATURE:

ICPR2006 Keynote2 |

|

Review by: Georg Langs Vienna University of Technology

Professor Lawrence O. Hall is with the AI lab at the University of South Florida. His interests are in machine learning, data mining and the integration of AI into medical imaging. In his talk, he explored the advantages and the often subtle details of using classifier ensembles.

Ensembles of classifiers can be built in a distributed manner and can deal with extremely large data sets. Weighting schemes may provide an approach to deal with heavily skewed data. Applications range from biology where classifiers have to deal with huge protein data banks, to security where instances of cell phone fraud can be indicated by this technique.

The two main approaches to ensemble learning are bagging and boosting. Bagging is a method to improve unstable classifiers, by drawing examples from the training data in a bootstrap manner, thereby decreasing the variance of the predictor. Boosting successively focuses on examples misclassified by previous weak learners, decreasing the model bias. In his talk Hall gave various examples for what should influence the decision for a specific method, or training configuration. In a large study, 5 methods were compared on 57 data sets. The classifier results were assessed by the Friedman-Holmes test based on ranks. Among the conclusions from this study were that boosting and random forests can improve on the accuracy of bagging for approximately 10% of the data sets, and boosting appears to benefit from larger ensemble sizes.

Many questions remain. How big should the ensemble be? What is the best way to vote? How to decide how much minority class data is enough? For now, Hall concluded that distributed learning and classifier ensembles make large and complex data sets manageable, it improves the classification accuracy, and that the Friedman-Holmes test is well suited for comparing them. The very close results in the comparison study triggered two questions from the audience: How relevant is the individual adjustment of the algorithms to different problems if a comparison is performed? How do the results, and the fact that the classifiers were applied to all data sets without further 'tuning' relate to the No free lunch theorem? |

|

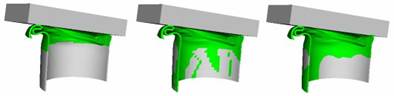

Simulation of a can being crushed.

On the left is ground truth, on the right are two attempts at predicting the crush zone. The region is correctly identified, which is most important for simulation designers/evaluators.

This is an example of large-scale data which could be distributed in ways that don't allow collection of all data in one place. |